LLMs Aren’t Thinking — They’re Predicting. And That’s Why Active Models Matter for AGI

We’ve reached a strange moment in AI where the world is both mesmerized and terrified by large language models. They write code, draft emails, pass exams, and even feel conversationally “smart.” But beneath the surface, every LLM—no matter how powerful—shares one fundamental truth:

👉 LLMs are next-token predictors.

They are not thinking. They are not reasoning. They are not forming new ideas.

At each step, they compute a probability distribution over possible next tokens, learned from their training data, and pick from it: either the single most likely token or a weighted random draw.
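
To make that concrete, here is a minimal Python sketch of a single prediction step. The scores in `toy_logits` are made up for illustration, and the function names are hypothetical; a real model would produce scores over tens of thousands of tokens, but the final step looks roughly like this.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution that sums to 1."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy(probs):
    """Always pick the single most likely token."""
    return max(probs, key=probs.get)

def sample(probs, rng):
    """Draw a token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores a model might assign after "The cat sat on the".
toy_logits = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
probs = softmax(toy_logits)

rng = random.Random(0)
print(greedy(probs))                          # mat
print([sample(probs, rng) for _ in range(5)])  # varies with the seed
```

Greedy decoding always returns the top token. Sampling sometimes returns a less likely one, which is why the same prompt can yield different answers.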

This is not a limitation of scale. It’s a limitation of architecture.

Why This Matters

If a model can only remix what we’ve trained it on, then:

- It cannot originate ideas outside its training distribution.

- It cannot identify “holes” in its own knowledge.

- It cannot guide its own learning or adapt its strategy.

- It cannot meaningfully reason about the unseen—it can only hallucinate.

And that last point is the real Achilles heel.

Hallucinations aren’t bugs; they are structural consequences.

Predict the next token without understanding the world?

You will invent details when the data runs out.

That’s not intelligence. That’s autocomplete at scale.
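
A toy example shows the mechanism. The sketch below is a tiny bigram model, not an LLM, and the corpus and function names are invented for illustration. The point it makes carries over, though: the interface always returns a token, even when the subject of the prompt never appeared in training.

```python
from collections import Counter, defaultdict

# Hypothetical training text. Note that "madrid" never appears.
corpus = "paris is in france . rome is in italy . berlin is in germany .".split()

bigrams = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    bigrams[prev][nxt] += 1
unigrams = Counter(corpus)

def predict_next(prev):
    """Return (token, context_was_seen). Some token is always returned."""
    if prev in bigrams:
        return bigrams[prev].most_common(1)[0][0], True
    # Context never seen: back off to overall word frequency.
    return unigrams.most_common(1)[0][0], False

def complete(prompt):
    """Complete a prompt with one word, using only the last word as context."""
    return predict_next(prompt.split()[-1])[0]

print(complete("madrid is in"))
```

The model has never seen "madrid", yet it fills in a country from its training text without hesitation. Nothing in the prediction step distinguishes "I know this" from "this looks statistically plausible."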

Are LLMs Dangerous? Yes — but Not Because They’re Malicious

LLMs don’t wake up one morning and decide to mislead us.

They don’t have wants, intentions, or goals unless we explicitly train them to act as if they do.

They are dangerous in the same way an extremely confident intern can be dangerous:

they answer everything—especially the things they don’t understand.

Malice isn’t the threat.

Misalignment and misuse are.

The Real Path to AGI: Active Models

If we want systems that go beyond prediction—systems that reason, infer, explore, and learn—we need architectures built for exactly that.

Enter Active Models.

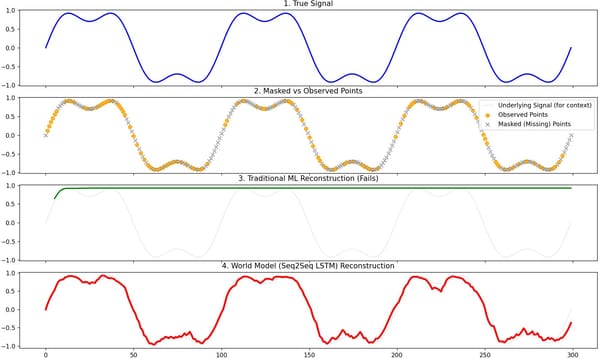

Active Models flip the script:

🔹 They don’t just respond — they explore.

They actively identify gaps in their understanding.

🔹 They don’t just hallucinate when information is missing — they infer.

They build latent representations that model the actual underlying world, not just the text describing it.

🔹 They don’t wait for humans to re-train them — they self-train.

They can propose their own learning objectives, estimate their own uncertainty, and design experiments to reduce it.
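
This post doesn’t tie Active Models to one specific algorithm. A well-known technique in the same spirit is uncertainty sampling from active learning: the learner chooses which question to ask next, and it asks where it is least sure. Here is a minimal sketch under simple assumptions: a hidden threshold on a line, and a hypothetical `oracle` that answers yes or no.

```python
def oracle(x):
    """Hidden ground truth the learner must query (hypothetical)."""
    return x >= 0.62

def active_learn_threshold(oracle, lo=0.0, hi=1.0, budget=10):
    """Repeatedly query the point of maximum uncertainty.

    Everything below `lo` is known to be False and everything from `hi`
    up is known to be True, so the midpoint is where the label is least
    certain. Each answer halves the unknown region.
    """
    for _ in range(budget):
        query = (lo + hi) / 2
        if oracle(query):
            hi = query   # threshold is at or below the query
        else:
            lo = query   # threshold is above the query
    return lo, hi

lo, hi = active_learn_threshold(oracle)
print(f"threshold lies in ({lo:.4f}, {hi:.4f}]")
```

Ten self-chosen questions narrow the gap to under 0.001. A passive learner handed ten random labeled points would typically be left with a much wider gap.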

This is how real intelligence works.

Humans don’t learn because someone gave us the right dataset.

We learn because we notice what we don’t know—and we go figure it out.

That is the leap from passive prediction to active reasoning.

This Is Why Active Models Are the Key to AGI

If AGI is ever going to emerge, it won’t come from scaling token prediction to infinity.

It will come from architectures that:

- form hypotheses

- test predictions

- model unseen possibilities

- refine themselves based on the gaps they find

- build causal understanding

- generate knowledge, not just text
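
The first few items on that list already have a simple mathematical form. The sketch below uses Bayesian updating, which is one possible formalization and not a claim about how any Active Model is built. The candidate hypotheses and the observed flips are made up for illustration.

```python
def update(belief, likelihoods):
    """Bayes' rule: reweight each hypothesis by how well it predicted the data."""
    unnorm = {h: belief[h] * likelihoods[h] for h in belief}
    total = sum(unnorm.values())
    return {h: p / total for h, p in unnorm.items()}

# Form hypotheses: three candidate values for a coin's P(heads), equally likely.
belief = {0.2: 1 / 3, 0.5: 1 / 3, 0.8: 1 / 3}

# Test predictions against hypothetical observations (1 = heads, 0 = tails).
observations = [1, 1, 0, 1, 1, 1, 0, 1]

for flip in observations:
    likelihoods = {h: (h if flip else 1 - h) for h in belief}
    belief = update(belief, likelihoods)   # refine after every observation

best = max(belief, key=belief.get)
print(best, round(belief[best], 2))
```

After six heads and two tails, most of the belief has moved to the 0.8 hypothesis, while the alternatives keep a small share instead of being discarded outright.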

Active Models mirror how humans operate in an incomplete world:

given fragments of data, we postulate, reason, and refine.

We don’t hallucinate—we infer.

True intelligence isn’t about generating the next word.

It’s about generating the next idea.

LLMs Took Us Far. Active Models Take Us Further.

LLMs changed the world by showing what prediction alone can achieve.

But prediction is not enough to reach AGI.

The next frontier isn’t bigger models.

It’s models that can think, question, and grow.

That’s why the future belongs to Active Models —

systems that don’t just produce information,

but can reason about what’s missing and teach themselves what comes next.

And that is much closer to real intelligence than anything we’ve built so far.