LLMs Are Smarter Than Any PhD — So Why Aren’t They Intelligent?

Every week we see claims that large language models are approaching “superhuman intelligence.” They pass bar exams, ace medical questions, summarize research, and explain quantum physics better than most professors.

In terms of raw cognitive output, LLMs already exceed the capabilities of:

- medical specialists

- legal scholars

- research scientists

- mathematicians

- historians

If intelligence were simply the accumulation and retrieval of knowledge, we’d already be living with AGI.

But here’s the paradox:

👉 A newborn baby has more true general intelligence than the most powerful LLM on Earth.

Not more facts.

Not more reasoning steps.

Not more test-taking ability.

But more intelligence.

Why?

Because human intelligence doesn’t emerge from knowledge.

It emerges from architecture.

**Humans Have Two Capabilities LLMs Don’t: A Subconscious Layer and a Reset Button**

1. The Subconscious: The Engine of Emergence

A baby isn’t “smart,” but their mind self-organizes:

- identifying patterns

- forming expectations

- predicting outcomes

- modeling physics

- learning social cues

All without being told a single fact.

This is subconscious emergence — a parallel engine of meaning-making that doesn’t rely on pre-existing data.

LLMs have no subconscious.

They have no bottom layer that builds understanding.

They only operate at the surface: predicting the next token.

That is intelligence through imitation, not emergence.
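To make "predicting the next token" concrete, here is a minimal sketch of the idea, not how any production LLM is built. It is a toy bigram model that counts which word follows which in a tiny corpus and then predicts the most frequent follower. The corpus and the function names `train_bigram` and `predict_next` are illustrative assumptions; real LLMs replace the counting table with a neural network over far longer contexts.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
print(predict_next(model, "dog"))  # None
```

Everything this toy model "knows" comes from text it has already seen. It can only replay the statistics of its corpus, and it has nothing to offer for a word it never encountered.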

Humans possess the same modeling, pattern-identification, and outcome-prediction abilities as LLMs, yet they also have an override: the subconscious serves up its models as recommendations, not requirements. The conscious mind can and does act differently from what the subconscious models suggest. This leads to boundary-breaking evolutionary leaps.

2. The Reset Button: Innovation Through Partial Inheritance

Every human starts with:

- a blank slate of experiences

- base instincts

- minimal built-in structure

We don’t inherit our parents’ knowledge.

We don’t get a pretrained weight file injected into our brains.

And that’s precisely what allows new forms of intelligence to emerge.

The lack of inherited experience is a feature, not a bug.

It is the engine of novelty.

An LLM inherits everything and resets nothing.

It is a memory accumulator, not an innovation generator.

This is why a child can create a new idea, but an LLM can only remix existing ones.

Why “IQ Scaling” Cannot Produce AGI

If IQ is the ability to solve problems and manipulate known information, then LLMs already have superhuman IQ.

But IQ is not the source of general intelligence in humans.

Think about it:

- The person with the lowest IQ still forms a self-model.

- They still build causal understanding of the world.

- They still infer missing information in ways no LLM can.

- They still possess agency, intention, and adaptive learning.

A human with minimal formal reasoning still has more general intelligence than an infinite-memory machine.

Because general intelligence is not about how much you know.

It’s about how you learn when you don’t know.

LLMs do not learn when they don’t know — they hallucinate.

Humans infer, test, explore, and reconstruct.

That’s emergent intelligence.

We Can’t “Think Harder” Our Way to AGI

Scaling token prediction won’t produce an agent that:

- forms goals

- seeks new knowledge

- evaluates uncertainty

- builds its own instructions

- discovers structure in the world

- reasons beyond its priors

We cannot IQ our way to AGI any more than we can memorize our way to wisdom.

AGI will emerge when we give machines what babies have:

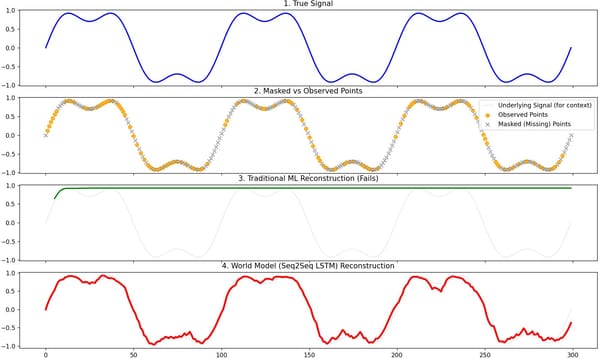

- A subconscious world-model that continuously organizes experience.

- A reset mechanism that creates novelty through partial inheritance, not endless accumulation.

Only then will models stop being encyclopedias and start becoming minds.

**LLMs Already Master Knowledge. AGI Will Master Understanding.**

LLMs show us how far intelligence goes when scaled.

But humans show us how intelligence emerges when structured correctly.

The future belongs to systems that don’t just know things but can discover, infer, and reinvent, even from a blank slate.

Because that is what real general intelligence looks like.